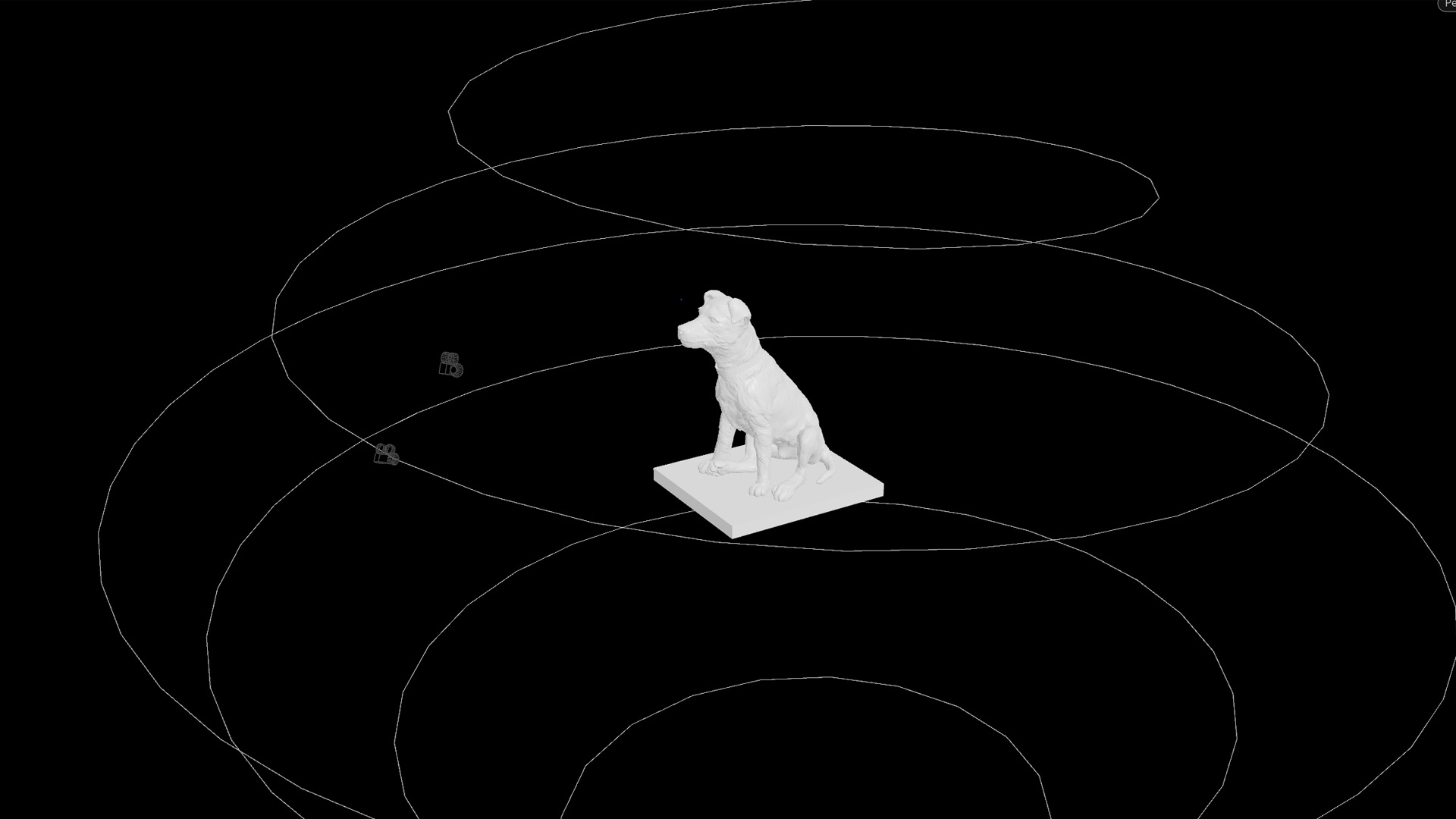

This project uses a 3D model to train a computer vision algorithm to "see" its 3D-printed counterpart in the real world. For more context, here is a video of it in action.

It relies on transfer learning: instead of starting from scratch, training begins from a model that has already been trained on real-world data, and that model is then adapted to the new task.
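As a toy illustration of the idea (not YOLACT's actual training code), the sketch below uses the simplest variant of transfer learning: a "pretrained" feature extractor is frozen and only a new classification head is trained on top of it. Full fine-tuning would also update the pretrained layers. The backbone weights, the data points, and the names `BACKBONE_W`, `backbone`, `train_head`, and `predict` are all hypothetical; in a real setup the frozen part would be a convolutional network pretrained on a large set of real-world images.

```python
import math

# Hypothetical "pretrained" backbone weights. In a real project these would come
# from a network trained on real-world images; here they are fixed toy values.
BACKBONE_W = [[1.0, 0.5], [-0.5, 1.0]]


def backbone(x):
    """Frozen feature extractor: one linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, lr=0.5, epochs=1000):
    """Train only a new logistic-regression head; the backbone is never updated."""
    feats = [backbone(x) for x in samples]  # computed once, since the backbone is frozen
    w = [0.0] * len(BACKBONE_W)
    b = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for f, y in zip(feats, labels):
            # Gradient of the mean binary cross-entropy loss w.r.t. head parameters.
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            for i, fi in enumerate(f):
                grad_w[i] += err * fi / n
            grad_b += err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(x, w, b):
    """Probability of class 1: frozen features, then the trained head."""
    f = backbone(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)


# Usage: a tiny, linearly separable toy dataset.
samples = [(1, 1), (2, 1), (1, 2), (-1, -1), (-2, -1), (-1, -2)]
labels = [1, 1, 1, 0, 0, 0]
w, b = train_head(samples, labels)
```

The point of the design is that only `w` and `b` change during training; the expensive, general-purpose part (the backbone) is reused as-is, which is why far less task-specific data is needed.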

Acknowledgements:

ML Model: YOLACT

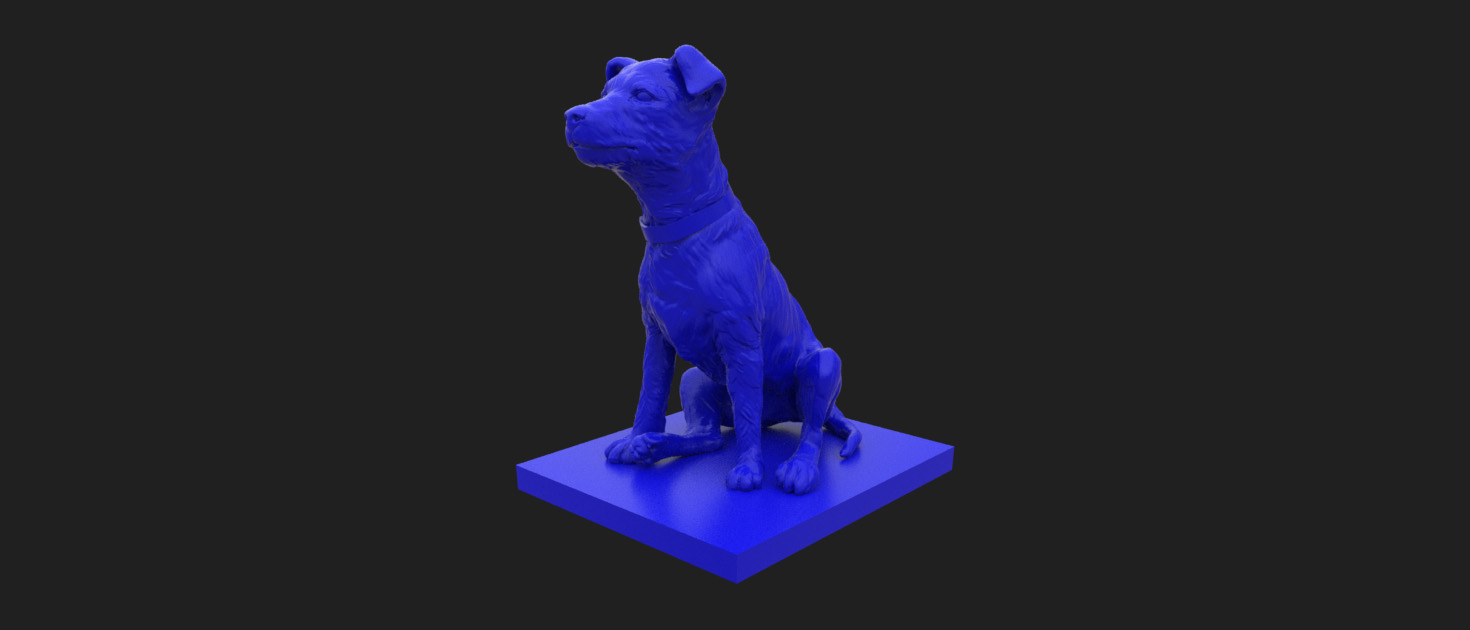

Digital Sculpture Artist: Ben Miller

Immersive Limit Blog: immersivelimit.com

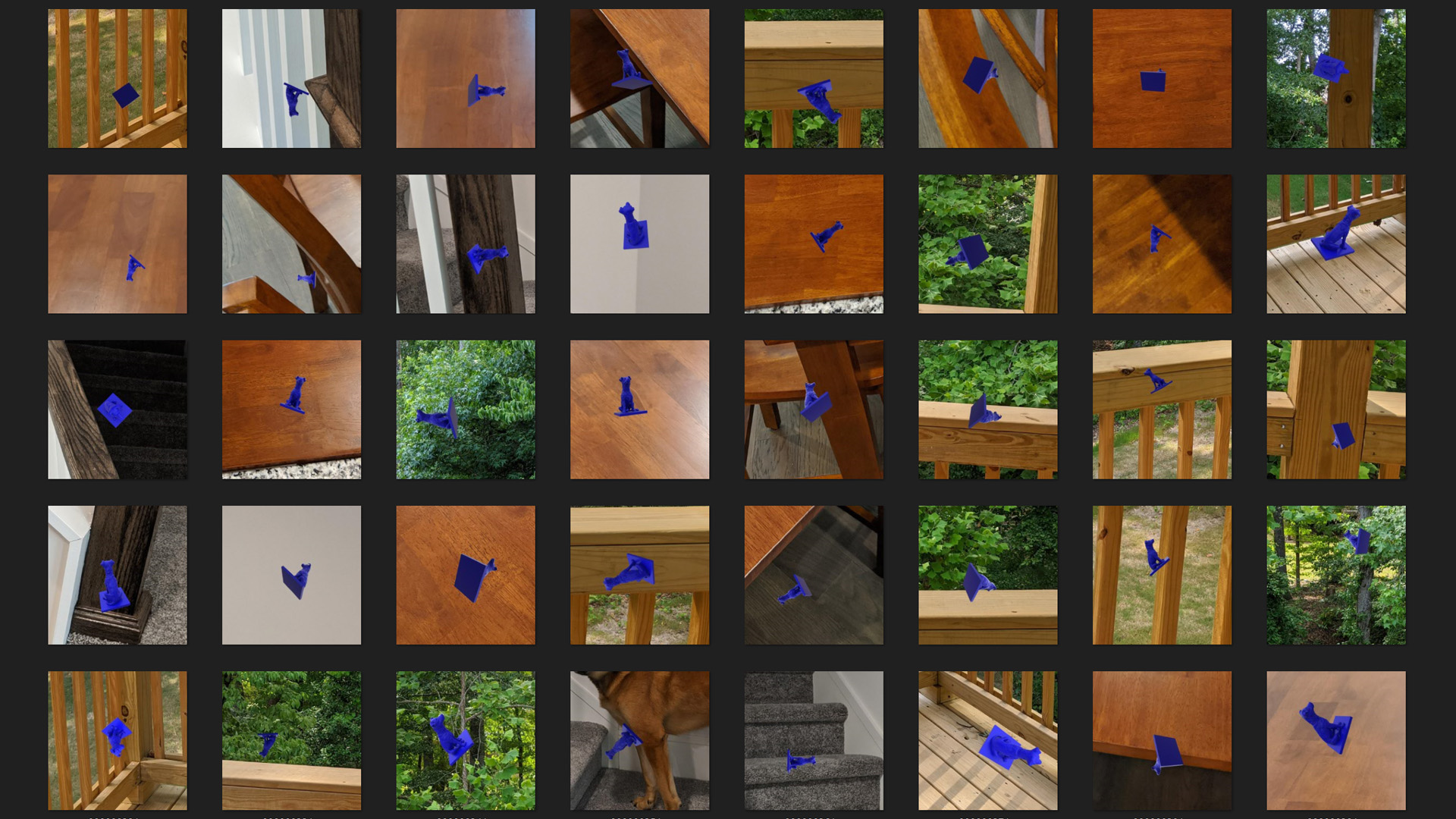

The end result is a computer vision model that can recognize the object it was trained on and segment it out of the image.

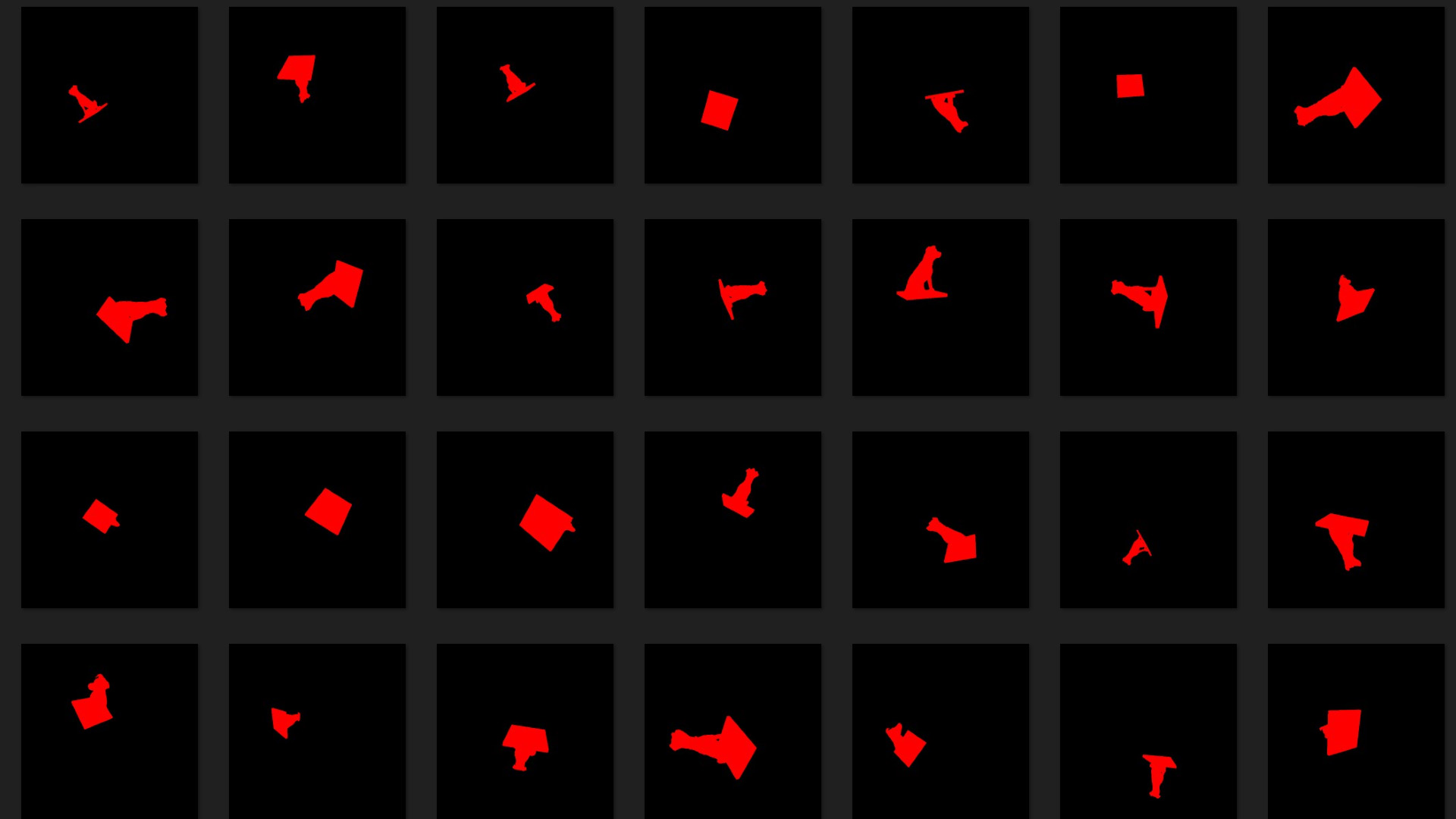

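With a synthetic dataset, masks for our objects are easy to create: because we control where each render is placed, we know exactly which pixels belong to the object, and those masks are fed to the machine learning model as labels.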
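Because every render is placed by the generating script, its mask can be read straight off the render's alpha channel. The sketch below assumes renders are RGBA images with a transparent background, represents images as plain Python lists of pixel tuples to stay dependency-free, and writes the mask as a COCO-style annotation, the format YOLACT uses for custom datasets. `mask_from_alpha`, `rle_counts`, and `coco_annotation` are hypothetical helper names, not part of YOLACT or the original project.

```python
def mask_from_alpha(rgba, threshold=0):
    """Binary mask (rows of 0/1) from an RGBA image given as rows of (r, g, b, a)."""
    return [[1 if px[3] > threshold else 0 for px in row] for row in rgba]


def rle_counts(mask):
    """Uncompressed COCO-style RLE: run lengths over the mask in column-major
    order, always starting with a (possibly empty) run of background pixels."""
    h, w = len(mask), len(mask[0])
    counts, current, run = [], 0, 0
    for x in range(w):          # column-major: walk down each column in turn
        for y in range(h):
            if mask[y][x] == current:
                run += 1
            else:
                counts.append(run)
                current, run = mask[y][x], 1
    counts.append(run)
    return counts


def coco_annotation(mask, ann_id, image_id, category_id):
    """Build a COCO-style annotation dict for one object mask (hypothetical helper)."""
    h, w = len(mask), len(mask[0])
    pixels = [(x, y) for y in range(h) for x in range(w) if mask[y][x]]
    if pixels:
        xs, ys = zip(*pixels)
        # COCO bbox is [x, y, width, height] in pixels.
        bbox = [min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1]
    else:
        bbox = [0, 0, 0, 0]
    return {
        "id": ann_id,
        "image_id": image_id,
        "category_id": category_id,
        "segmentation": {"size": [h, w], "counts": rle_counts(mask)},
        "area": len(pixels),
        "bbox": bbox,
        "iscrowd": 0,
    }


# Usage: a 3x3 render whose opaque pixels form an L shape.
clear, solid = (0, 0, 0, 0), (9, 9, 9, 255)
render = [[clear, solid, clear], [clear, solid, solid], [clear, clear, clear]]
ann = coco_annotation(mask_from_alpha(render), ann_id=1, image_id=1, category_id=1)
```

Whether a particular pipeline accepts RLE segmentations with `iscrowd: 0` is worth checking, since many COCO tools pair RLE with crowd regions; converting the mask to polygons is the other common option.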

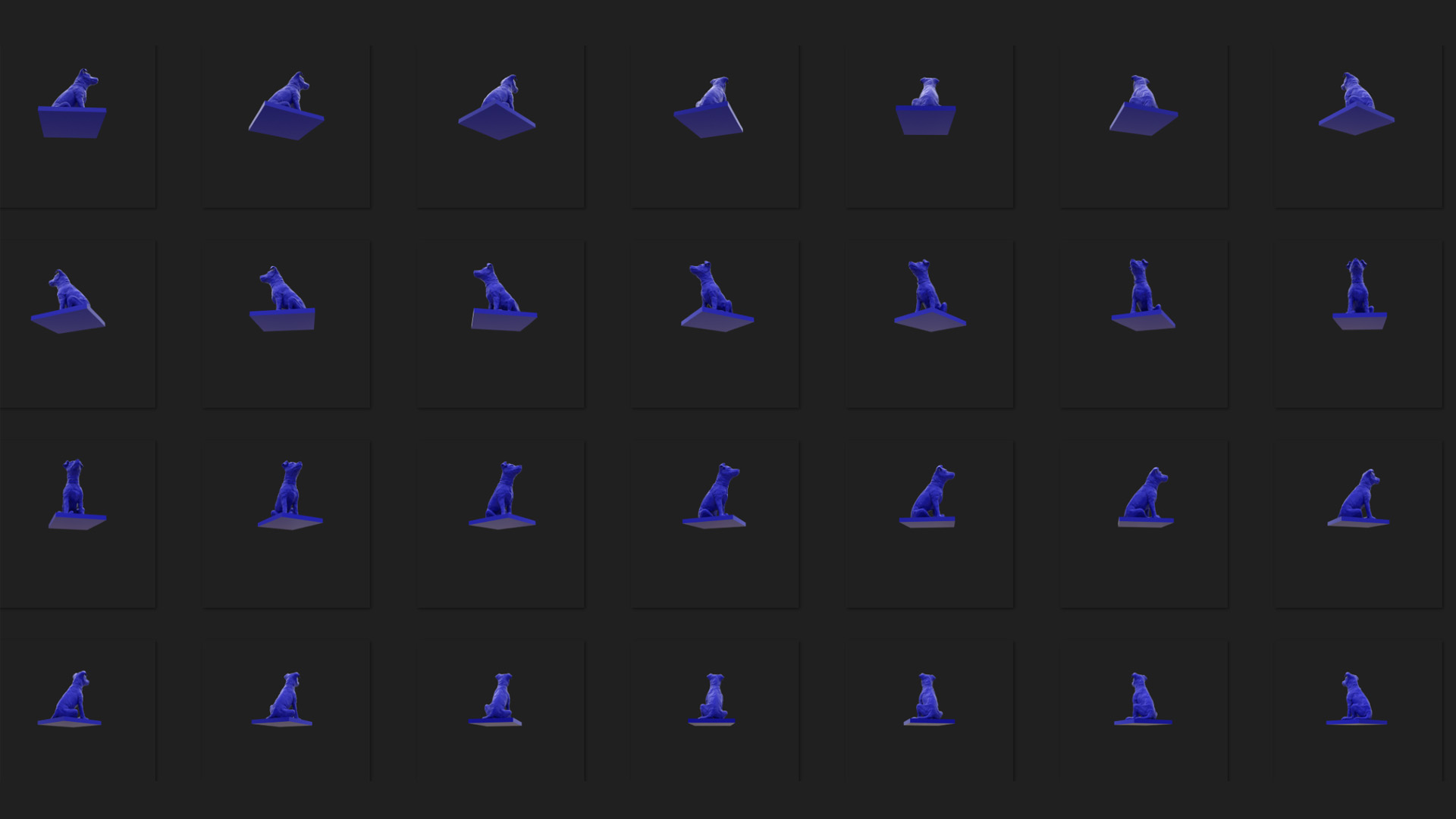

Several thousand training images were created by randomly pasting 3D renders of the digital sculpture over real-world background images.
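The pasting step can be sketched as follows. This is an illustration under stated assumptions, not the project's actual script: it pastes a single RGBA render per image at a uniformly random offset that keeps the render fully in frame, and alpha-blends it over an RGB background. A fuller generator might also randomize scale, rotation, and the number of objects per image. `paste_render` is a hypothetical name, and images are plain lists of pixel tuples to keep the example dependency-free.

```python
import random


def paste_render(background, render, rng):
    """Alpha-blend an RGBA render onto an RGB background at a random offset.

    Returns the composite image and a full-size binary mask of where the
    render landed. The render must fit inside the background.
    """
    bh, bw = len(background), len(background[0])
    rh, rw = len(render), len(render[0])
    if rh > bh or rw > bw:
        raise ValueError("render must fit inside the background")
    # Random top-left corner such that the whole render stays in frame.
    top = rng.randint(0, bh - rh)
    left = rng.randint(0, bw - rw)
    composite = [list(row) for row in background]  # copy; leave the input untouched
    mask = [[0] * bw for _ in range(bh)]
    for y in range(rh):
        for x in range(rw):
            r, g, b, a = render[y][x]
            if a == 0:
                continue  # fully transparent: keep the background pixel
            alpha = a / 255.0
            bg = composite[top + y][left + x]
            composite[top + y][left + x] = tuple(
                round(alpha * fg + (1 - alpha) * c) for fg, c in zip((r, g, b), bg)
            )
            mask[top + y][left + x] = 1
    return composite, mask


# Usage: paste a 2x2 opaque white render onto a 4x4 black background.
rng = random.Random(42)
background = [[(0, 0, 0)] * 4 for _ in range(4)]
render = [[(255, 255, 255, 255)] * 2 for _ in range(2)]
composite, mask = paste_render(background, render, rng)
```

The returned mask is exactly the per-object label the model needs, which is what makes labeling a synthetic dataset essentially free compared with hand-annotating photos.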